Gender bias in artificial intelligence

“Figure 1 shows two smart robots. Are they boys or girls?”

“But what are we talking about? How can artificial intelligence possibly be gender-biased? Machines have no gender, do they?”

Indeed. Artificial intelligence (AI) is the intelligence displayed by machines. It is not new: many years have passed since 1956, when Samuel, Simon, Newell, McCarthy and Minsky established artificial intelligence as a field of research. Since then, the technology has advanced greatly. Understanding natural language, playing successfully at games that require complex strategies (chess, Go), developing autonomous driving systems and optimally planning routes in multipoint distribution networks are some of the advances made possible by AI. Statistical methods and symbolic formalisms, among others, are used for this purpose. Machine learning is what underpins the current AI revolution. Machine learning methods extract knowledge from large databases, and companies, government agencies, hospitals and other organizations [2] use them for diverse applications: for example, to identify objects in video sequences, assess the creditworthiness of loan applicants, look for errors in legal contracts or find the most appropriate treatment for a person with cancer [1] [2].

AI is already part of our daily lives: many of the applications we carry on our mobile phones are based on artificial intelligence techniques, and we use them as a matter of course. For example, it is increasingly common to talk to phones and computers: Amazon offers Alexa, Google offers Google Home, Apple offers Siri and Microsoft offers Cortana. These are voice assistants, tools that recognize and process a spoken question or request and, once they have searched the Internet, answer it through speech synthesis.

We are aware that, owing to habits built up over many years, society itself is not neutral, and that gender bias (among other biases) is often evident. For example, caring roles are assigned to women and roles involving strength to men. But what about artificial intelligence? As artificial intelligence and machine learning evolve, there is a risk of building these biases into the tools of the future. And it is already happening.

Data, the source of the problem

Machine learning algorithms make predictions according to what they have learned. The algorithms themselves have no bias regarding gender, race or anything else. But, as mentioned, they are based on data, mathematics and statistics. The bias is in the data, which reflect society and are the result of our decisions. In the criteria people use to hire candidates, in the assessment of students' work, in medical diagnoses, in the description of objects: in all of these we show biases relating to culture, race, education and more [5], and among them, of course, gender bias. In short, the models we create will be, at best, only as good or as neutral as the data used to train them. We collect and label the data, and in doing so we project our subjectivity onto them, which leads to bias in the systems developed through machine learning. Moreover, artificial intelligence amplifies stereotypes [2]. In fact, when we select the most suitable model from among machine learning algorithms, we choose the one that best fits the data or the one with the highest accuracy, leaving other criteria aside.
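The following minimal Python sketch illustrates how a model chosen purely for accuracy can reproduce, and even sharpen, a bias present in its training data. The hiring records are invented for illustration, and the "model" is deliberately simplistic (it predicts the most frequent past outcome for each group); it is not the method of any cited study.

```python
from collections import Counter, defaultdict

# Hypothetical, hand-made "historical hiring" records: (gender, qualified, hired).
# All candidates are equally qualified, but past decisions favored men.
records = (
    [("man", True, True)] * 40
    + [("man", True, False)] * 10
    + [("woman", True, True)] * 10
    + [("woman", True, False)] * 40
)

def train(rows):
    """Learn, for each (gender, qualified) pair, the most frequent past outcome."""
    counts = defaultdict(Counter)
    for gender, qualified, hired in rows:
        counts[(gender, qualified)][hired] += 1
    return {key: c.most_common(1)[0][0] for key, c in counts.items()}

def accuracy(model, rows):
    """Fraction of records whose outcome the model predicts correctly."""
    hits = sum(model[(gender, qualified)] == hired for gender, qualified, hired in rows)
    return hits / len(rows)

model = train(records)
print("qualified man hired?  ", model[("man", True)])
print("qualified woman hired?", model[("woman", True)])
print("accuracy on the data: ", accuracy(model, records))
```

In the invented data, 80% of the men and 20% of the women were hired. The accuracy-maximizing rule turns that skew into "always hire the man, never the woman", which is one simple way to picture how a stereotype can be amplified.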

The problem is serious, especially when decisions are made on the basis of what machines propose. This happens frequently in everyday life, since tools based on artificial intelligence are widespread [4].

Some examples of gender bias

If we go back about fifteen years, to when the first speech recognition systems were installed in cars, those systems did not understand female voices, because they had been trained on men's voices. Although these systems have improved in recent years and now recognize both women's and men's voices, gender bias remains evident. Of the voice assistants mentioned above, three out of four have a woman's name and, what is more, all of them have a woman's voice by default. Although this can now be changed, the obedient assistants that serve us are female by default.

Why? The reason is simple: we expect to find women in administrative, care and service roles. It would not be surprising if, in a survey, the majority preferred the female voice.

Gender bias does not appear only in voice-related contexts. In natural language processing, for example in the semantic analysis of texts, large dictionaries called word embeddings are used; they are extracted from large collections of texts and relate words to one another. A study by researchers at Boston University [2] has shown that these dictionaries can produce inferences such as “John writes better programs than Mary”. Naturally, this is a consequence of the texts used to create them.
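One common way to make this kind of bias visible is to compare word vectors along a "gender direction" (the difference between the vectors for "man" and "woman"). The sketch below uses hand-made three-dimensional vectors chosen purely for illustration; real word embeddings are learned from text and have hundreds of dimensions, and the function name `gender_score` is hypothetical.

```python
import math

# Hand-made toy vectors, for illustration only (not real embeddings).
vectors = {
    "man": (1.0, 0.0, 0.2),
    "woman": (-1.0, 0.0, 0.2),
    "programmer": (0.6, 0.8, 0.0),
    "nurse": (-0.7, 0.7, 0.0),
    "teacher": (0.0, 1.0, 0.0),
}

def cosine(u, v):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# The "gender direction": what distinguishes "man" from "woman" in this toy space.
gender_direction = tuple(m - w for m, w in zip(vectors["man"], vectors["woman"]))

def gender_score(word):
    """Positive: the word leans towards 'man'; negative: towards 'woman'."""
    return cosine(vectors[word], gender_direction)

for word in ("programmer", "nurse", "teacher"):
    print(word, round(gender_score(word), 2))
```

In this toy space "programmer" scores positive, "nurse" negative and "teacher" zero. With real embeddings the scores would depend entirely on the texts they were learned from.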

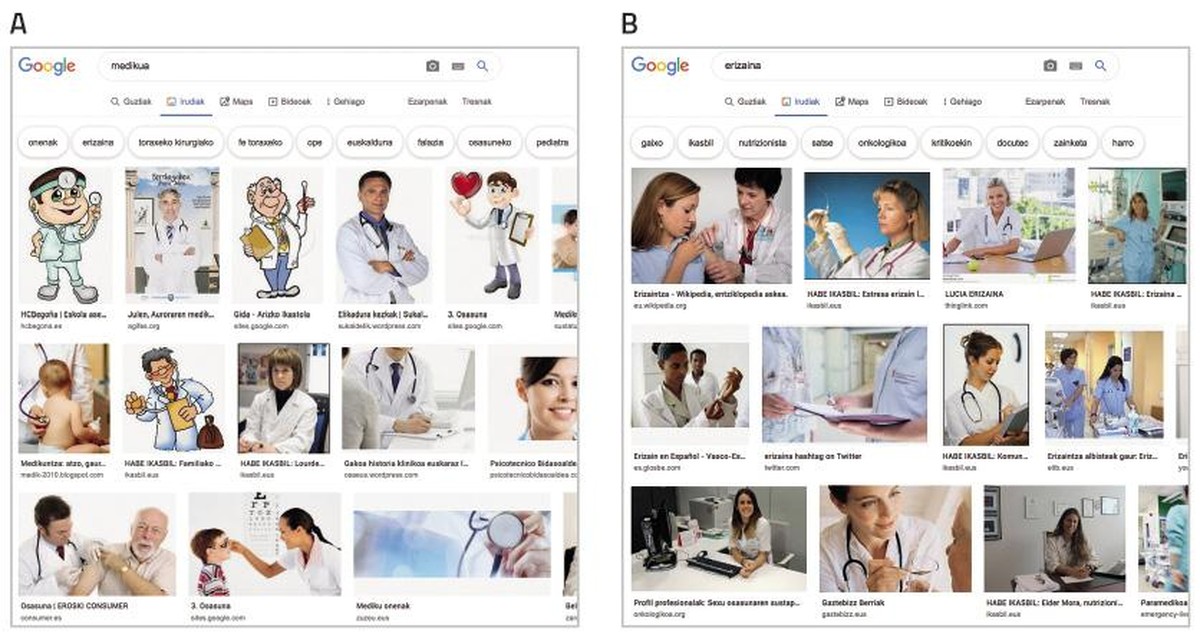

In an image-dominated society, machine learning systems feed increasingly on images. The well-known imSitu (http://imsitu.org/) and COCO (http://cocodataset.org/) datasets contain more than 100,000 images from the web, labeled with descriptions of complex scenes [3]. In both cases there are more male figures, and the labels for objects and actions show gender bias. In the COCO dataset, kitchen utensils (spoons and forks) are associated with women, while outdoor sports equipment (snowboards and tennis rackets) is associated with men. The same happens in the Labeled Faces in the Wild database, created from Yahoo News images taken between 2002 and 2004 and still widely used: 78% of the images show men and 84% show white people, with George W. Bush being the person who appears most often. As these datasets are used to train image recognition software, the software will inherit the bias. Try it: search for “doctor” in Google Images and the screen fills with men in white coats. If you search for “nurse”, on the other hand, women predominate (see figure 2).

Ways to eliminate bias

For the proposals of artificial intelligence systems to be reliable, we must first ensure that those proposals are objective. Algorithms have no awareness and therefore cannot correct their proposals by themselves.

There is no single way to deal with the problem; it can and must be addressed on many fronts.

On the one hand, the active participation of women in every step of technological development will be essential. According to statistics, the share of women in IT jobs is moving further and further away from 50%. Although changing this will help, it does not guarantee that gender bias will disappear from the systems that are created. An example is AVA (Autodesk Virtual Agent), a software agent that provides Autodesk's customer service worldwide. It combines artificial intelligence with emotional intelligence to speak “face to face” with the customer. Although AVA was created by a mostly female team, it has a female voice and appearance. The excuse is the same as ever: people perceive female voices as friendlier and more cooperative. This example shows that it is not enough for women to join work teams.

One way to eliminate bias from machine learning systems is to thoroughly clean and correct the datasets used for training. Some researchers work on exactly this and have removed illegitimate associations from texts, for example men/computers and women/appliances [6]. Other researchers propose training separate classifiers for each of the groups represented in a dataset [7].
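As a rough illustration of what removing such an association can mean for word vectors, the sketch below subtracts from a word's vector its component along a gender direction, so that the word no longer leans towards either gender. This is a simplified sketch under stated assumptions and not necessarily the procedure used in [6]: the vectors are hand-made toy values and `neutralize` is a hypothetical helper name.

```python
def dot(u, v):
    """Dot product of two vectors of equal length."""
    return sum(a * b for a, b in zip(u, v))

def neutralize(vec, direction):
    """Return vec with its component along `direction` removed."""
    scale = dot(vec, direction) / dot(direction, direction)
    return tuple(a - scale * b for a, b in zip(vec, direction))

# Hand-made toy vectors, for illustration only (not real embeddings).
man = (1.0, 0.0, 0.2)
woman = (-1.0, 0.0, 0.2)
computer = (0.6, 0.8, 0.0)    # leans towards "man" in this toy space
appliance = (-0.7, 0.7, 0.0)  # leans towards "woman" in this toy space

gender_direction = tuple(m - w for m, w in zip(man, woman))

for name, vec in (("computer", computer), ("appliance", appliance)):
    cleaned = neutralize(vec, gender_direction)
    # After neutralizing, the projection onto the gender direction is zero.
    print(name, "before:", dot(vec, gender_direction),
          "after:", dot(cleaned, gender_direction))
```

Only the component along the chosen direction is removed; the rest of the vector, which carries the word's other meaning, is left untouched.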

It is clear, therefore, that we must all keep in mind that if data carry a certain bias, the systems that learn from them will readily inherit it, which can have serious consequences for us. We will need tools to detect and identify bias, and principles for creating new datasets. Experts may be needed to do this. And why not develop artificial intelligence tools for that very task?