3D Displays, from Large to Small

In an image or on a screen, that is, on any flat surface, there are only two dimensions. In real life, however, we see things in three dimensions: we also perceive depth, that is, we notice that some things are closer to us and others farther away.

We owe that perception to our two slightly separated eyes. The closer an object is to us, the more the image seen by each eye differs, whereas for distant objects both eyes receive practically the same image. Our brain processes the information collected by both eyes and derives from it a sense of position in depth.
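The relationship between distance and the difference between the two views can be illustrated with a little geometry. The sketch below is only an approximation: it assumes an eye separation of 6.5 cm (a typical figure, not one given in this article) and an object straight ahead, and the function name `vergence_angle_deg` is made up for the example. It computes the angle between the two eyes' lines of sight, which shrinks towards zero as the object moves away.

```python
import math

EYE_SEPARATION_M = 0.065  # assumed typical distance between the eyes, in metres


def vergence_angle_deg(distance_m, eye_separation_m=EYE_SEPARATION_M):
    """Angle between the two eyes' lines of sight to a point straight ahead."""
    return math.degrees(2 * math.atan(eye_separation_m / (2 * distance_m)))


# The nearer the object, the larger the angle, so the more the two views differ.
for d in (0.25, 1.0, 10.0, 100.0):
    print(f"{d:6.2f} m -> {vergence_angle_deg(d):.3f} degrees")
```

At a hundred metres the angle is a small fraction of a degree, which is why both eyes see practically the same image of distant things.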

3D technology in cinema

As already noted, we cannot perceive the depth of objects shown on a screen or in an image, since it is flat and its whole surface is at the same distance from us. Nevertheless, the film industry has long pursued realism, trying to make this third dimension (3D) perceptible. Although the technology used for this has evolved and improved over the years, it always comes down to reproducing our own vision mechanism: the film is shot with two cameras separated by roughly the distance between the eyes, and the image from each camera is then delivered to the corresponding eye. This is known as stereoscopic technology.

At first this was done with color filters. The images taken by each camera were passed through color filters (one red, the other blue) and then projected as a single image, the sum of both; the spectator wore glasses with one red and one blue filter, so that each eye received only the image meant for it. A 3D effect was obtained, but not a very good one, and at the expense of realistic colors. This technology is called anaglyph imaging.
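The red and blue filter idea can be sketched in a few lines. This is a simplified illustration, not how any particular film was produced: images are assumed to be small lists of rows of `(r, g, b)` tuples, the left camera is arbitrarily given the red filter, and the names `make_anaglyph` and `through_filter` are invented for the example.

```python
def make_anaglyph(left, right):
    """Sum a red-filtered left image and a blue-filtered right image.

    Both images are lists of rows of (r, g, b) tuples of the same size.
    """
    return [
        [(lp[0], 0, rp[2]) for lp, rp in zip(left_row, right_row)]
        for left_row, right_row in zip(left, right)
    ]


def through_filter(image, channel):
    """Keep one color channel (0 = red, 2 = blue), as a colored lens would."""
    return [[px[channel] for px in row] for row in image]


left = [[(200, 10, 10), (50, 50, 50)]]    # tiny 1x2 image from the left camera
right = [[(10, 10, 180), (60, 60, 60)]]   # tiny 1x2 image from the right camera

anaglyph = make_anaglyph(left, right)
print(through_filter(anaglyph, 0))  # what reaches the eye behind the red lens
print(through_filter(anaglyph, 2))  # what reaches the eye behind the blue lens
```

Each eye recovers only a single channel of its own camera's image, which is why the separation works but the colors suffer.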

In recent years, however, other technologies with a significantly better effect have come into use, and 3D films and cinemas have become widespread (recently 3D television too). The most widely used technologies are eclipse systems and, above all, polarization systems.

Eclipse systems exploit the speed of our visual perception. Since film is conventionally shown at 24 images per second, this method projects 48 images per second, alternating the images taken by the two cameras. The glasses are synchronized with the projector and alternately "darken" the lens in front of each eye. The disadvantage of this system is that the glasses need batteries and a synchronization mechanism.
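The alternation described above can be sketched as follows. It is a toy model under stated assumptions: frames are represented by labels such as `L0` and `R0`, each camera is taken to record 24 frames per second, and `interleave` and `shutter_state` are hypothetical names, not part of any real projector or glasses software.

```python
CAMERA_FPS = 24  # frames per second recorded by each camera


def interleave(left_frames, right_frames):
    """Alternate left and right frames: L0, R0, L1, R1, ..."""
    sequence = []
    for left, right in zip(left_frames, right_frames):
        sequence.append(("left", left))
        sequence.append(("right", right))
    return sequence


def shutter_state(eye_of_frame):
    """Only the lens of the eye the current frame is meant for stays open."""
    return {
        "left_lens_open": eye_of_frame == "left",
        "right_lens_open": eye_of_frame == "right",
    }


# One second of film from each camera.
left_frames = [f"L{i}" for i in range(CAMERA_FPS)]
right_frames = [f"R{i}" for i in range(CAMERA_FPS)]

projected = interleave(left_frames, right_frames)
print(len(projected), "images projected per second")
for eye, frame in projected[:4]:
    print(frame, shutter_state(eye))
```

The glasses have to apply `shutter_state` in step with the projector, which is where the need for batteries and synchronization comes from.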

In polarization systems (the most widely used in cinemas today), the image taken by each camera is projected with light polarized in a particular direction, and each lens of the glasses lets through only light polarized in one of those directions, so that each eye sees only the image from one camera.
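For ideal linear polarizers, how much light gets through a lens follows Malus's law: the transmitted fraction is the squared cosine of the angle between the light's polarization and the lens's axis. The sketch below assumes linear polarization at 0 and 90 degrees, which is a simplification chosen for the example (real cinema systems may use other schemes, such as circular polarization), and `transmitted_fraction` is an illustrative name.

```python
import math


def transmitted_fraction(light_angle_deg, lens_angle_deg):
    """Malus's law for an ideal linear polarizer: cos^2 of the angle difference."""
    theta = math.radians(light_angle_deg - lens_angle_deg)
    return math.cos(theta) ** 2


# Assumed polarization angles of the two projected images and the two lenses.
IMAGE_ANGLES = {"left image": 0, "right image": 90}
LENS_ANGLES = {"left lens": 0, "right lens": 90}

for image, image_angle in IMAGE_ANGLES.items():
    for lens, lens_angle in LENS_ANGLES.items():
        fraction = transmitted_fraction(image_angle, lens_angle)
        print(f"{image} through {lens}: {fraction:.2f}")
```

Under these assumptions each lens passes its own image in full and blocks the other one, so each eye sees a single camera's image.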

3D displays

On cinema screens and televisions it is impossible to send a different image to each eye of every spectator without glasses. A computer screen, by contrast, usually has a single person in front of it, generally at the same angle and the same distance. For this use it is therefore possible to make autostereoscopic screens (the 3D effect is produced by optical and electronic elements of the screen itself, not by glasses).

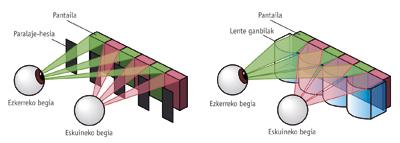

To make each eye see a different image, these screens take advantage of the fact that each eye looks at the screen from a different angle and that this angle is always much the same: parallel vertical barriers or convex lenses one pixel wide are attached to the screen. The best-known device using this system is the Nintendo 3DS console, released this winter.
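One simple way to picture such a screen is as two images woven together column by column, with the barriers or lenses steering alternate columns to alternate eyes. The sketch below assumes exactly that layout (even pixel columns for the left eye, odd ones for the right), which is an illustrative simplification rather than the specification of any real device; `interleave_columns` and `seen_by` are invented names.

```python
def interleave_columns(left, right):
    """Build the screen image: even columns from the left view, odd from the right."""
    return [
        [left_row[x] if x % 2 == 0 else right_row[x] for x in range(len(left_row))]
        for left_row, right_row in zip(left, right)
    ]


def seen_by(screen, eye):
    """Columns that the barriers or lenses let a given eye see."""
    start = 0 if eye == "left" else 1
    return [row[start::2] for row in screen]


# Tiny 1x4 images, with labels standing in for pixel values.
left = [["L0", "L1", "L2", "L3"]]
right = [["R0", "R1", "R2", "R3"]]

screen = interleave_columns(left, right)
print(screen)
print(seen_by(screen, "left"))
print(seen_by(screen, "right"))
```

In this model each eye receives only half of the screen's columns, so the horizontal resolution per eye is halved.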

3D mobile devices

But we will see it on more and more devices. LG recently launched its Optimus 3D smartphone, which has a 3D screen like the ones just described. It is not only for viewing, either: it has two cameras set roughly as far apart as the eyes, which let us take three-dimensional photos and record three-dimensional videos. Many phones, tablets and laptops with 3D screens and 3D cameras will surely come out in the coming months. Other models have appeared with a 3D screen but without the two cameras, or with the two cameras but without the 3D screen, like LG's own Optimus Pad tablet, so be careful about what is being sold to us under the 3D name...

We will have to see whether this trend amounts to more than a passing fashion, since more than a few people think it has no practical use... I, at least, find very practical the 3D videoconferencing demo made by researchers in North Carolina, in the US. The demo used 3D screens and Kinect devices like the one we presented last month: on one side of the conversation, four Kinects distributed around the room build a 3D model of that room; on the other side, another Kinect tracks the eyes of the viewer, and a 3D screen shows the representation of the first room from a perspective that follows the viewer's movements, as if they were really in the room. And maybe I am not the only one who has seen the practical side; maybe that is why Microsoft, the owner of Kinect, has bought the videoconferencing company Skype... Too conspiratorial? We will see!