At the fingertips

Buttons hold no special mysteries. They are always in the same place and each has a specific function. In one way or another, pressing a button activates something behind it that performs that function. In many cases we will also see on a screen what we have achieved with the button. With touch screens, by contrast, we act on the screen itself. They have no buttons or keys, only a surface. But how does a touch screen know where we have placed our finger? What is behind the screen?

In a touch

In the oldest touch-screen system, the key is not behind the screen at all. The infrared system is the oldest and perhaps the easiest to understand. Around the edges of the screen, emitters and receivers form a grid: along two adjacent sides (one vertical and one horizontal) there are infrared light-emitting diodes, each facing a receiver on the opposite side. Horizontal and vertical infrared beams thus form a grid over the screen surface. When a finger or any other object touches the screen, it cuts at least one horizontal and one vertical beam. The computer only has to detect which beams are broken to know where the screen was touched.
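The detection step is simple enough to sketch in a few lines. The following Python is a minimal illustration, not real controller firmware: it assumes each receiver reports True while its beam arrives and False when something blocks it, and the beam spacing `BEAM_PITCH_MM` and the function name `locate_touch` are hypothetical.

```python
from statistics import mean
from typing import List, Optional, Tuple

BEAM_PITCH_MM = 5.0  # hypothetical spacing between neighbouring beams


def locate_touch(x_receivers: List[bool],
                 y_receivers: List[bool]) -> Optional[Tuple[float, float]]:
    """Return the (x, y) touch position in mm, or None if nothing is touching.

    x_receivers[i] is the receiver of the vertical beam at column i;
    y_receivers[j] is the receiver of the horizontal beam at row j.
    A value of False means that beam is blocked.
    """
    broken_x = [i for i, lit in enumerate(x_receivers) if not lit]
    broken_y = [j for j, lit in enumerate(y_receivers) if not lit]
    # A touch must cut at least one vertical and one horizontal beam.
    if not broken_x or not broken_y:
        return None
    # A finger is often wider than one beam, so take the centre of the broken run.
    return (mean(broken_x) * BEAM_PITCH_MM, mean(broken_y) * BEAM_PITCH_MM)
```

For example, if the vertical beams at columns 4 and 5 and the horizontal beam at row 2 are blocked, the sketch reports a touch at (22.5 mm, 10.0 mm). The resolution is limited by how closely the beams are spaced.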

The system is very simple and does not cover the screen at all, so it does not affect image quality. Another advantage is that the screens are very durable. However, they are expensive, take up a lot of space and tolerate dirt very poorly. They are also very prone to false touches: if a fly landed on the screen, for example, it would be registered as a touch.

Today there are many other technologies for building touch screens. The three most commonly used are resistive, capacitive and surface acoustic wave (SAW) screens.

The most widely used of the three are resistive touch screens. They consist of two main layers: a rigid sheet (such as glass) covered by a flexible sheet (polyester). Both sheets have a transparent metallic coating on the inside, usually indium tin oxide (ITO), and are kept apart by insulating spacer dots, leaving a small gap between them. An electric current is applied across these conductive coatings. When the flexible top sheet is pressed, the two coatings come into contact, which changes the electrical current. An electronic controller measures this change in resistance and converts it into x and y coordinates.
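How the controller turns that contact into coordinates can be sketched under one common assumption, the so-called 4-wire design. In this design, the controller applies a voltage across one coating and reads the voltage at the contact point through the other coating. That voltage grows in proportion to the position along that axis. The controller then swaps the roles of the two coatings to get the other axis. The Python below covers only the arithmetic performed after the readings have been taken. The calibration limits and function names are hypothetical.

```python
from typing import Tuple


def adc_to_mm(reading: int, raw_min: int, raw_max: int, length_mm: float) -> float:
    """Map a raw ADC reading from one coating's voltage divider to a position in mm."""
    # The contact point splits the driven coating into two resistors, so the
    # probed voltage (and hence the reading) rises linearly along the axis.
    fraction = (reading - raw_min) / (raw_max - raw_min)
    # Clamp readings that fall slightly outside the calibrated range.
    fraction = min(max(fraction, 0.0), 1.0)
    return fraction * length_mm


def resistive_position(adc_x: int, adc_y: int,
                       cal_x: Tuple[int, int], cal_y: Tuple[int, int],
                       width_mm: float, height_mm: float) -> Tuple[float, float]:
    """Convert one pair of readings (X coating driven, then Y coating driven) to mm.

    cal_x and cal_y are hypothetical (min, max) readings measured at the panel edges.
    """
    x = adc_to_mm(adc_x, cal_x[0], cal_x[1], width_mm)
    y = adc_to_mm(adc_y, cal_y[0], cal_y[1], height_mm)
    return (x, y)
```

With a calibrated range of 100 to 3900 on a 200 mm wide panel, a reading of 2000 lands exactly in the middle, at 100 mm.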

Besides a finger, any object can be used to operate the screen, but hard, sharp objects can damage the coating of the flexible top sheet. These screens are also degraded by ultraviolet light, which makes them lose flexibility and transparency. On the other hand, they are resistant to dust and water, cheap and reliable. As a result, they are widely used today in industrial applications, shops, PDAs and other electronic devices.

Capacitive screens, by contrast, must be touched with a bare finger, or with a conductive stylus if a pen is used. Capacitive screens offer great brightness and are not affected by external elements. However, processing the signal requires fairly complex electronics, which makes these screens more expensive.

Finally, surface acoustic wave screens work much like the infrared system, but ultrasound travels across the screen surface instead of infrared light. A transducer emits a horizontal and a vertical acoustic wave. Acoustic reflectors spread these two waves across the screen surface. The waves are not emitted continuously but in pulses, and sensors pick them up. When a finger is placed on the screen, it absorbs energy from the waves and weakens them. By detecting which waves arrive attenuated at the sensors, the computer calculates the coordinates of the touched point. It can also calculate a z value in addition to the x and y coordinates: the harder the press, the more energy the finger absorbs.
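The timing idea can be sketched with a deliberately simplified model. The model assumes three things: each sensor records the pulse as a list of amplitude samples; a stored no-touch recording serves as a baseline; and the delay at which the dip appears is proportional to the position along that axis. The constants (`SAMPLE_TO_MM`, `MIN_DROP`) and the function names are hypothetical. Real controllers are considerably more elaborate.

```python
from typing import List, Optional, Tuple

SAMPLE_TO_MM = 0.15  # hypothetical: screen distance represented by one sample of delay
MIN_DROP = 0.2       # hypothetical: ignore dips weaker than 20 % of the baseline


def find_dip(baseline: List[float], received: List[float]) -> Optional[Tuple[float, float]]:
    """Return (position_mm, relative_drop) of the deepest dip, or None if there is none."""
    best_index, best_drop = -1, 0.0
    for i, (ref, now) in enumerate(zip(baseline, received)):
        # Relative attenuation at this moment compared with the untouched screen.
        drop = (ref - now) / ref if ref > 0 else 0.0
        if drop > best_drop:
            best_index, best_drop = i, drop
    if best_index < 0 or best_drop < MIN_DROP:
        return None
    # Simplified model: the later the dip arrives, the farther along the axis it is.
    return best_index * SAMPLE_TO_MM, best_drop


def saw_locate(base_x: List[float], rx_x: List[float],
               base_y: List[float], rx_y: List[float]) -> Optional[Tuple[float, float, float]]:
    """Return (x_mm, y_mm, z) for a touch, or None if either axis shows no dip."""
    hit_x = find_dip(base_x, rx_x)
    hit_y = find_dip(base_y, rx_y)
    if hit_x is None or hit_y is None:
        return None
    (x, drop_x), (y, drop_y) = hit_x, hit_y
    # Harder press -> more energy absorbed -> deeper dip, so use it as z.
    z = (drop_x + drop_y) / 2
    return x, y, z
```

Here z is only a relative value between 0 and 1. Turning it into an actual force would require calibration.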

These screens are the most modern and the most expensive of those we have seen, and also the ones that give the clearest image. There is no layer over the screen, so no brightness is lost, and since there is no coating, there is nothing to damage. On the other hand, they must be operated with a finger or a soft stylus; thin, hard objects, such as hard pens, do not work. They also tolerate dirt, dust and water poorly.

Most screens in use today are of these three types. But touch technology does not end there. It has advanced a great deal in recent years, and touch is gaining ground in the world of new technologies.

Ten fingers

"With today's computers it seems that we are all Napoleon, with our left hand tucked into our shirt, but many things are done much better with both hands. Touch screens are a bridge between the virtual and physical worlds." These are the words of Bill Buxton, the father of multi-touch screens.

Until recently, touch screens could detect only a single touch. In essence, they let users click directly with a finger instead of with a mouse. Multi-touch screens, by contrast, can detect more than one finger at a time, more than one hand, or even more than one person.

Research and development on multi-touch technologies began in the 1980s. But last year, at the 2006 TED conference, New York University researcher Jeff Han surprised everyone by presenting his own screen. On it he displayed and manipulated images as if he were really touching them with his hands, saying "in fact, we should handle them like that". He drew lines with several fingers and typed on virtual keyboards that appeared on the screen. He took a satellite image and moved it, enlarged it, turned it into 3D and zoomed in on it... all with his hands.

The idea for this technology came from a glass of water. Han noticed that light was reflected differently at the points of contact with the glass. He recalled that in optical fibers light travels by bouncing off the inner walls of the fiber until it emerges at the other end, which may be several kilometers away. This phenomenon is called total internal reflection.
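Total internal reflection happens only when light travelling inside a denser medium meets a less dense one at a large enough angle. The angle of incidence, measured from the surface normal, must exceed the critical angle, which Snell's law gives as θc = arcsin(n_outside / n_inside). The short Python sketch below computes it. The refractive indices in the example (about 1.5 for glass, 1.0 for air) are typical approximate values, not figures from the article.

```python
import math


def critical_angle_deg(n_inside: float, n_outside: float) -> float:
    """Angle of incidence (from the surface normal) above which light is totally reflected."""
    if n_outside >= n_inside:
        # Light going into an equally dense or denser medium is never totally reflected.
        raise ValueError("total internal reflection needs n_inside > n_outside")
    return math.degrees(math.asin(n_outside / n_inside))
```

For glass against air this gives roughly 42 degrees. Rays that strike the surface more obliquely than that stay trapped inside, which is what lets a fiber guide light over long distances.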

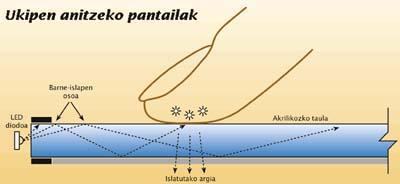

So suppose light were carried through a glass surface as in an optical fiber, and something such as a finger got in its way, as happened with the glass of water. The light would then stop bouncing: the finger would absorb part of it and reflect part of it outwards. That is the mechanism Han used to build his touch screen: frustrated total internal reflection (FTIR).
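The same rule explains why a finger "frustrates" the reflection. In the simplified ray-optics sketch below, a finger touching the acrylic replaces air (index about 1.0) with skin, whose index is assumed here to be about 1.44. That value is close to acrylic's roughly 1.49, so the critical angle jumps and a ray that was trapped can now escape into the finger. The index values are approximations chosen for illustration. The real effect also depends on how closely the skin touches the surface.

```python
import math

N_ACRYLIC = 1.49  # approximate refractive index of acrylic
N_AIR = 1.00
N_SKIN = 1.44     # assumed rough value for skin; real skin varies


def is_trapped(angle_deg: float, n_inside: float, n_outside: float) -> bool:
    """True if a ray hitting the surface at angle_deg (from the normal) is totally reflected."""
    if n_outside >= n_inside:
        return False  # no critical angle exists, so the light can always leave
    critical = math.degrees(math.asin(n_outside / n_inside))
    return angle_deg > critical


# A ray bouncing at 60 degrees inside the sheet:
print(is_trapped(60, N_ACRYLIC, N_AIR))   # True: the bare surface keeps it inside
print(is_trapped(60, N_ACRYLIC, N_SKIN))  # False: where a finger touches, it escapes
```

In this picture, the light that escapes into the finger is partly absorbed and partly scattered back out through the sheet. That scattered light is what a camera can see.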

He placed LEDs along one side of an acrylic sheet and mounted an infrared camera behind it. The light travels inside the sheet, so when a finger is placed on it, the light reflected outwards is captured by the camera as a pixelated image. Moreover, the harder the finger presses, the more information the camera captures. In short, Han's screen turns touch into light. Finally, software measures the shapes and sizes detected by the camera and converts them into coordinates.
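The software step can be illustrated with a basic blob-detection sketch. It assumes the camera frame arrives as a grid of 8-bit brightness values. Bright pixels above a hypothetical `THRESHOLD` are grouped into connected blobs. Each blob's centroid is taken as a touch position and its pixel count as a rough pressure indicator. This is a generic image-processing technique offered as an illustration, not Han's actual software.

```python
from collections import deque
from typing import List, Tuple

THRESHOLD = 128  # hypothetical brightness cut-off for an 8-bit infrared camera


def find_touches(frame: List[List[int]],
                 threshold: int = THRESHOLD) -> List[Tuple[float, float, int]]:
    """Return (x, y, area) for each bright blob in a camera frame."""
    rows = len(frame)
    cols = len(frame[0]) if frame else 0
    seen = [[False] * cols for _ in range(rows)]
    touches = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or frame[r][c] < threshold:
                continue
            # Flood-fill this blob using 4-connected neighbours.
            queue = deque([(r, c)])
            seen[r][c] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx] and frame[ny][nx] >= threshold):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            # Centroid = touch position; area grows as the finger presses harder.
            cy = sum(p[0] for p in pixels) / len(pixels)
            cx = sum(p[1] for p in pixels) / len(pixels)
            touches.append((cx, cy, len(pixels)))
    return touches
```

Because every blob is reported separately, several fingers or two hands simply show up as several entries in the list. This is what makes multi-touch possible with a single camera.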

Thanks to this simple, effective mechanism, screens can be built in different sizes and for different applications: interactive whiteboards, tables, digital walls and so on. To commercialize them, Han founded the company Perceptive Pixel.

But the big technology companies are not asleep either. In May 2007 Microsoft introduced Microsoft Surface, a table based on very similar technology, which it plans to bring to market in spring 2008. The surface of this table is a screen on which images, maps and so on can be manipulated by hand. It can also recognize objects placed on top of it. For example, to download photos from a Wi-Fi-enabled digital camera, it would be enough to put the camera on the table. The same goes for mobile phones...

And it is precisely in mobile phones that Apple has launched its own multi-touch technology. The iPhone is a next-generation mobile phone that features, among other things, a touch screen, as does the iPod touch, a version of the company's successful MP3 player.

It feels as if we were in a science fiction film, controlling machines with nothing but hand movements. Remember Tom Cruise in Minority Report? Touch technology is getting close to something similar. And yes, all of this is real.