Tell me where you publish... and I'll tell you who you are

The progress of science depends on scientists communicating their results to one another. This step is essential, because advancing knowledge means building on the results previously obtained by other researchers.

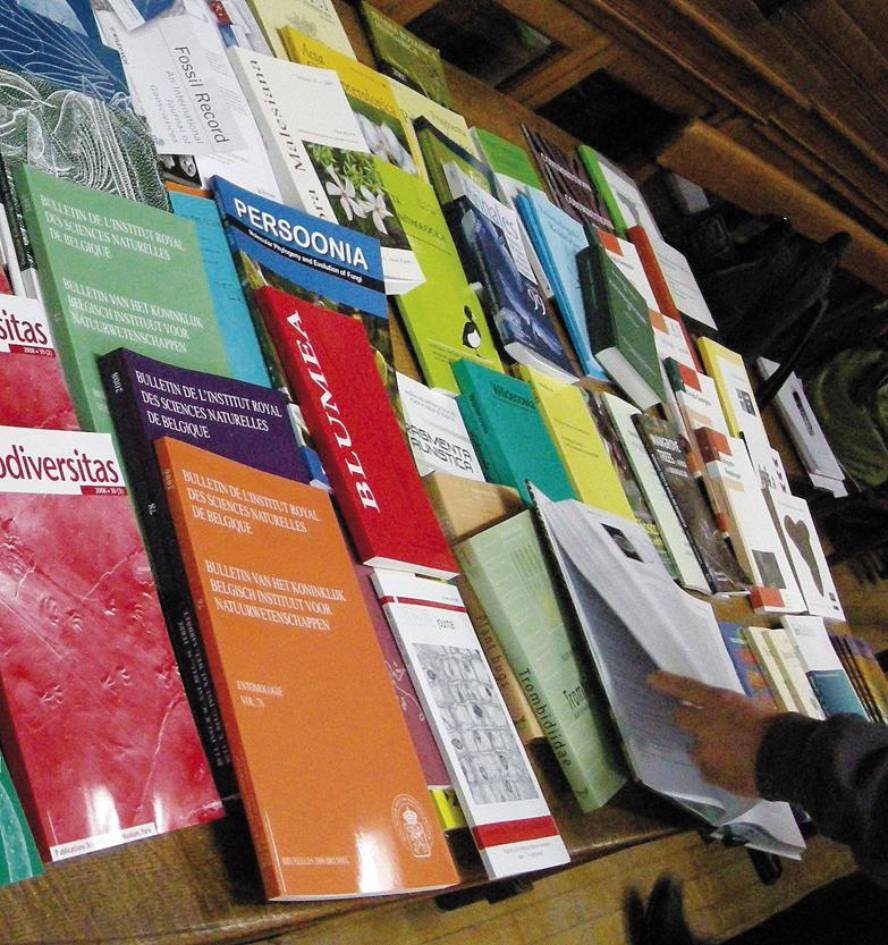

Scientific results are published in highly specialized journals, and each scientific field has a wide variety of them. To keep some control over the correctness, and therefore the reliability, of the results it publishes, each journal has established a peer review system: at least two researchers working in the same area act as reviewers of the work.

These reviewers assess the work anonymously and prepare a more or less exhaustive report on the different sections of the research, indicating whether or not it is suitable for publication. The final decision on whether a work is published rests with the editorial committee or the editor of the journal.

When researchers decide to publish their results, they must carefully select the journal to which they will send them, taking into account each journal's specialization. Besides the specific research topic, they also need to weigh the impact the journal can have.

Each journal is assigned a so-called impact index, which takes into account the number of times its articles are cited in other works, the importance of the journals that cite them, and the year in which the citations are made, among other data. This index is currently the universal measure for assessing published scientific work. The higher the impact index, the broader the journal's reach and the greater its importance.

As a result, the world of science has come to revolve around publications. Results, and where they have been published, are the yardstick for almost everything in science. "Tell me where and how much you publish... and I will tell you who you are and what you are going to get" could well summarize the world of science today. Research projects are granted based on scientific publications and their impact index, positions and research contracts are awarded on the same basis, and it can determine whether or not one gets a job. At present, everything in science depends on publications.

All this generates a series of problems. Under the pressure to publish, some researchers act too fast and publish their data before verifying them, in order to be first and to appear in the best journal. This is what happened recently with the stem cell research conducted at the Japanese institute RIKEN (STAP, stimulus-triggered acquisition of pluripotency). But this is not the only case. The same happened a few years ago to the Korean researcher Hwang Woo-suk, who in 2005 published an article on the cloning of human embryos in the journal Science, but other authors could not reproduce the experiment. And it has also happened with research from other countries.

In the case of STAP cells, errors were detected in the research in February 2014, one month after the article was published in the journal Nature. The problem came to light when other researchers, after reading the publication, tried to replicate the experiments and did not succeed. Because the studies had appeared in Nature, one of the journals with the greatest impact, the errors also received wide coverage in the media.

The advance described in the publication was that somatic cells could be turned into stem cells simply by subjecting them to stress, for example by keeping them at a pH lower than the physiological one. Despite the withdrawal of the article, the researcher continues to believe in the results and has published in the same journal an even more detailed version of the protocol, with technical tips that had not been included in the previous articles, so that other researchers can replicate the results.

Given the evident flaws of the publication system built around high-impact journals, the scientific community criticizes it. However, the interests tied to impact are by now so great that the system will be very difficult to dismantle unless a better way of measuring quality is put in place.

In this regard, Randy Schekman, winner of the 2013 Nobel Prize in Physiology or Medicine, said that his laboratory would stop publishing research results in the highest-impact journals, such as Nature, Cell or Science, because he considers that they distort the scientific process. These statements were published in the newspaper The Guardian in December 2013, after he had been awarded the Nobel Prize. According to the prestigious American scientist, the pressure to publish in these journals leads scientists to cut short the path that serious, thoughtful research should follow, and generates fashions and trends in research that go beyond scientific progress. "In many cases, journal managers decide what work will be published and they, as non-scientists, look more at media impact than at scientific progress," the Nobel laureate said.

In my opinion, peer review is important, although it also has flaws and problems. I think that having reviewers act anonymously brings more problems than advantages, since conflicts of interest can hide behind that anonymity. A constructive critique based on arguments does not need to be anonymous. There is no need to question the quality of the journals that have published the main scientific advances of the last 100 years. However, the quality measurement system, which is so closely tied to the impact index, should be reviewed so that, in addition to impact, other criteria are taken into account when evaluating research, especially when distributing research funding. In this context, proper management of funds should carry great weight: a research team that, even with little funding, is capable of obtaining good results and training researchers should be duly valued, and not only according to the number of publications and their impact, as happens in some cases.

On the other hand, when assessing researchers' curricula vitae, one should take into account where they have carried out their research, since research capacity should not be measured only through the impact of journals, but also in light of the working environment. A researcher who works in a research center with large infrastructure and material and technical support should be expected to be more productive than one who combines research with teaching, for example, or who has no material or personnel support for conducting research.

As with everything else in life, not everything is black or white, and the details matter. It is therefore important to have objective, quantifiable measures of the quality of research and researchers, but it is even more important to look at other factors that give a better picture of the research and of the researchers. If such criteria have not yet been established, it is time to define and apply them, in an effort to be fairer.