Using the Basque language in large language models and chatbots

Chatbots capable of answering almost any question and performing all kinds of tasks have been around for two years, and in that time ChatGPT, Gemini, Copilot, Claude and others have become ubiquitous and widely used. These tools are based on large language models (LLMs). In this article we explain the path that the Basque language is taking in these models. We also explain how chatbots and LLMs work, both so that Basque speakers can understand the difficulties of making progress in them and because it is important to understand how a technology that is now ubiquitous, and increasingly necessary in many fields, works.

Almost two years ago we wrote an article in this column entitled The Boom of Generative Artificial Intelligence. We cannot say that the impact of that explosion has diminished since then; on the contrary, the shock wave of that boom has kept spreading everywhere. The generative AI systems mentioned at the time were able to generate text or images; since then we have seen systems that generate music (such as Suno) and video (such as Sora from OpenAI). A lot of new chatbots have appeared: Microsoft's Copilot, Anthropic's Claude, Google's Gemini, Meta's Meta AI, Perplexity, Jasper AI... There has also been a proliferation of new large language models (LLMs) or new versions of earlier ones: PaLM, GPT-4, Grok, Gemini, Claude... The picture has also changed considerably for freely licensed LLMs, with the emergence of OpenAssistant, Mixtral, Gemma, Qwen and, above all, Meta's LLaMa.

LLM or Large Language Model

It is not easy to keep track of this soup of names and concepts. Still, it is both useful and interesting to know at least what an LLM is, what a chatbot is, and how they are developed.

It can be said that for two or three years we have been living in a new era in language and speech technologies, namely the era of large language models or LLMs. LLMs are a type of deep neural network capable, on the one hand, of handling well many problems that had not been solved until now (automatic generation of texts, answering questions of any kind, writing computer programs...) and, on the other hand, of performing many tasks that were already carried out with other types of deep neural networks as well as or better than them (machine translation, automatic summarization...). In short, any text-based task can now be done with LLMs, and in many cases already is.

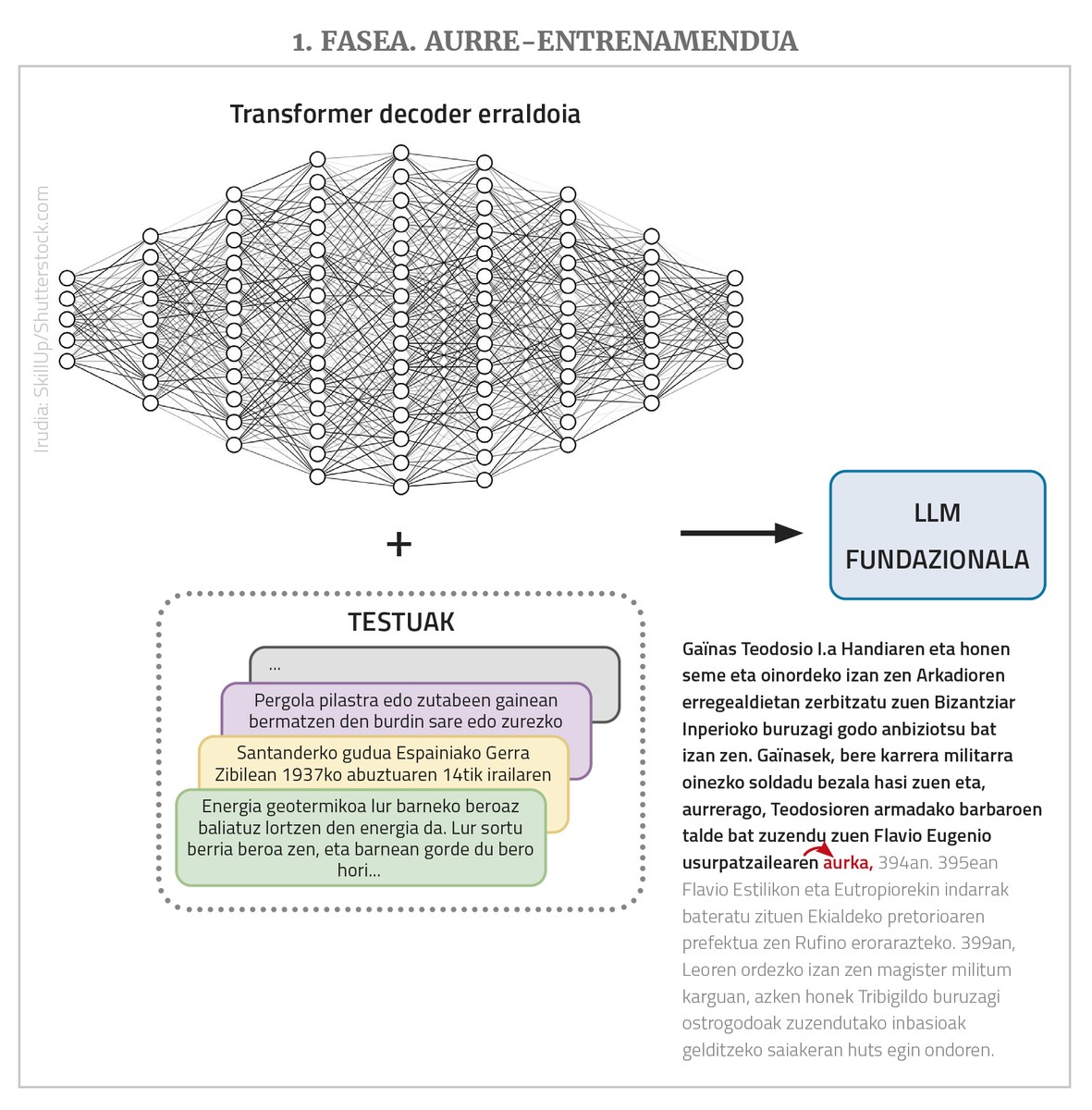

LLMs are deep neural networks of the transformer type, and most of them belong to the so-called decoder class. But beyond the structure, they have several other important characteristics. On the one hand, they are gigantic networks: they have many input nodes and many intermediate layers, each with many nodes; the parameters that the network has to learn, that is, the weights of the links between these nodes, usually number in the billions. On the other hand, they are trained with plain texts, but with incredibly large amounts of them, with collections of texts of up to trillions of words. Finally, they are usually multilingual, that is, they are trained with texts in many languages and work in all of them (but better in some than in others, as will be seen later).

The mission of an LLM is, in principle, a single and very simple thing: to predict the most likely next word given a sequence of words, taking into account the sequences of words it has seen during training. That is to say, if we give it the sequence “fourteen distant nuts,” as input (a word-by-word rendering of the opening of a Basque proverb), it should return “approach”. And that is all an LLM does. But if we then give it “fourteen distant nuts, approach”, it will return “and”, and if we do it once more, “four”... And so, through this process called autoregression, we can get LLMs to produce long texts or answers. In addition, as already mentioned, they can have a large number of input nodes, even several thousand, which makes it possible to give them very long and complex input texts or requests (“prompts”, to use the term of the field).
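The autoregressive loop described above can be illustrated with a toy sketch. It is not how an LLM is built: instead of a neural network that looks at the whole input, it uses a simple table of word-pair counts that looks only at the last word, and the one-sentence "training corpus" and all function names are assumptions made for the example. What it does share with an LLM is the loop itself: predict one word, append it to the input, and predict again.

```python
from collections import Counter, defaultdict

def train_bigram(corpus):
    """Count, for every word, which words follow it in the training text."""
    followers = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            followers[current][nxt] += 1
    return followers

def predict_next(followers, words):
    """Return the most frequent follower of the last word, or None if unseen."""
    candidates = followers.get(words[-1])
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]

def generate(followers, prompt, max_new_words=10):
    """Autoregression: feed each predicted word back in as part of the input."""
    words = prompt.split()
    for _ in range(max_new_words):
        nxt = predict_next(followers, words)
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)

# Toy one-sentence corpus (an assumption for this example).
corpus = ["fourteen distant nuts approach and four"]
model = train_bigram(corpus)
completion = generate(model, "fourteen distant nuts")
print(completion)
```

Given the prompt “fourteen distant nuts”, the loop adds “approach”, then “and”, then “four”, and stops because nothing has been seen after “four”.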

In reality, LLMs don’t work with words, but with numbers. And since it would take too many numbers to represent every word in many languages, they work with fragments of words called “tokens”. But to keep the explanations simple, we will speak as if they worked with words.
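As a rough illustration of what splitting words into tokens and numbers means, here is a minimal sketch. The tiny vocabulary, the greedy longest-match strategy and the function names are assumptions for the example; real LLM tokenizers learn their vocabulary of fragments from data and are more elaborate.

```python
UNK_ID = -1  # id used for fragments that are not in the vocabulary

def tokenize(word, vocab):
    """Split a word into the longest known fragments, left to right."""
    tokens = []
    start = 0
    while start < len(word):
        for end in range(len(word), start, -1):
            piece = word[start:end]
            if piece in vocab:
                tokens.append(piece)
                start = end
                break
        else:
            # No known fragment starts here: fall back to a single character.
            tokens.append(word[start])
            start += 1
    return tokens

def encode(word, vocab):
    """Turn a word into the list of numbers the network would receive."""
    return [vocab.get(token, UNK_ID) for token in tokenize(word, vocab)]

# Hypothetical vocabulary: "etxe" (house) plus two Basque case endings.
vocab = {"etxe": 0, "ra": 1, "tik": 2}

print(tokenize("etxera", vocab), encode("etxera", vocab))    # "to the house"
print(tokenize("etxetik", vocab), encode("etxetik", vocab))  # "from the house"
```

With only three fragments, the sketch can represent two different word forms; this is the reason tokens need far fewer numbers than whole words.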

So, in short, this is an LLM: a huge neural network of the transformer type (usually of the decoder class) that, given a long text, predicts the next word, and that has been pre-trained for this purpose with many texts in many languages (we will see later why it is called "pre-training"). LLMs in this basic state are also known as foundational models, GPTs (Generative Pre-Trained Transformers), autoregressive language models... Because of the large size of the network and of its training collection, the texts it generates are usually linguistically correct, appropriate in morphology, syntax and semantics, and generally show great knowledge about the world, as well as other skills.

From LLMs to chatbots

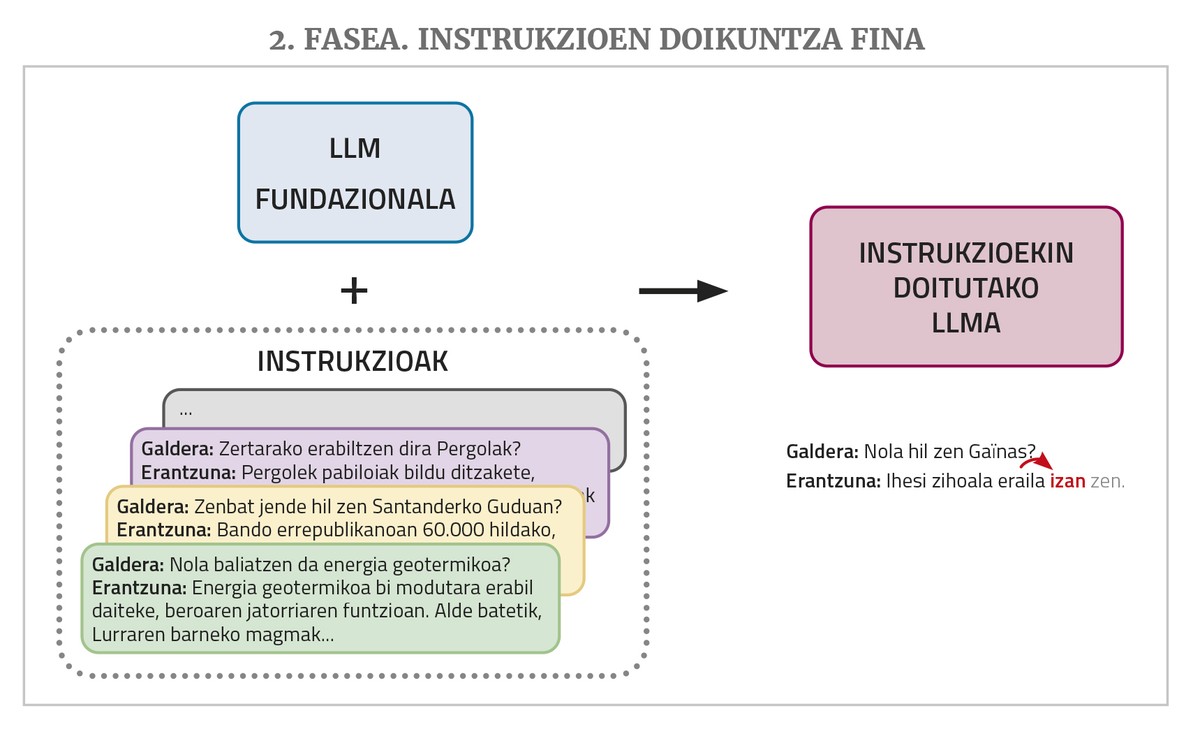

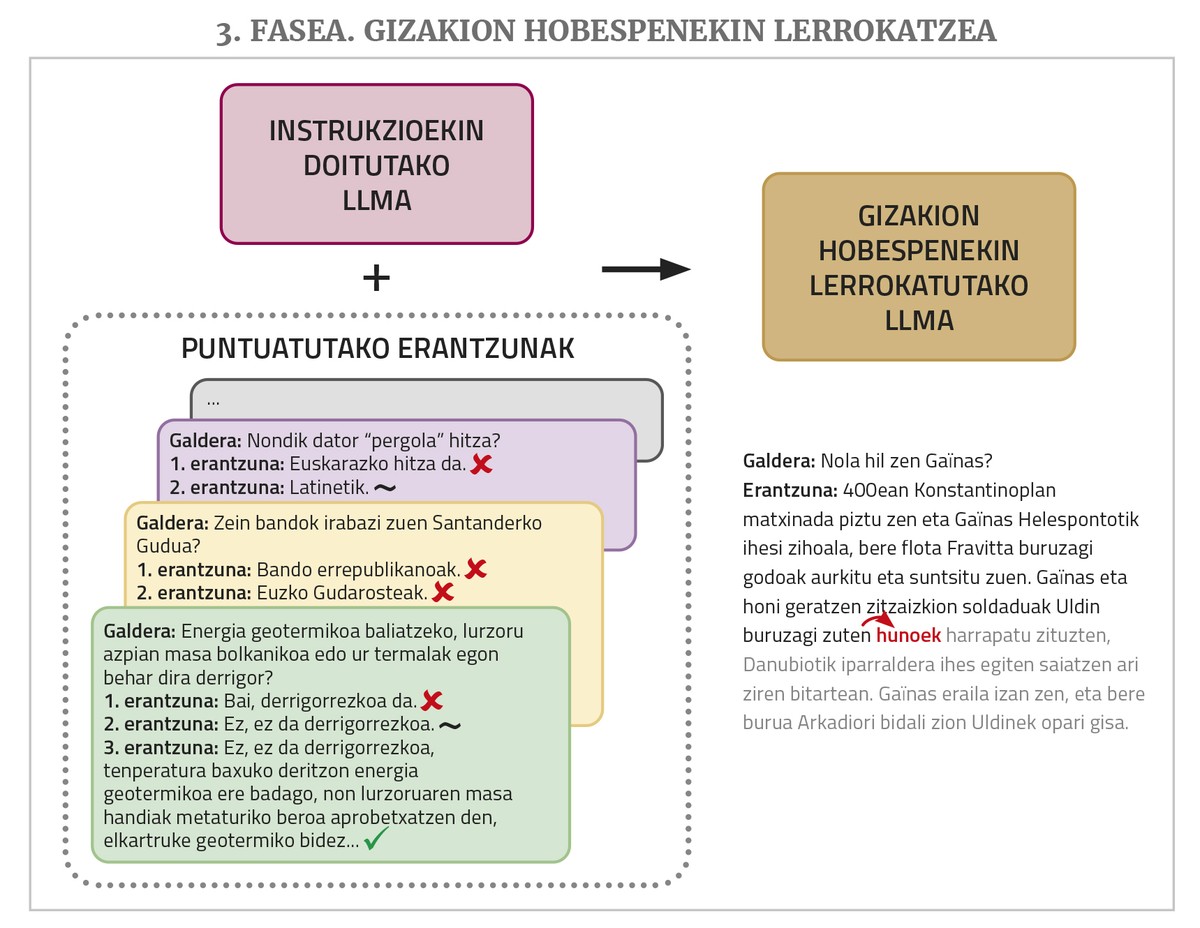

A chatbot like ChatGPT or Gemini has such an LLM at its base, but still needs two more training steps: instruction fine-tuning and alignment with human preferences.

An LLM, as mentioned above, continues a text that is given to it as input. But chatbots are given questions or requests to perform a task. The LLM may respond well to these if it has seen similar question-answer or request-response pairs in its training texts, but there are not many of these in training corpora. For this reason, in order to improve the performance of chatbots, the LLM requires another training phase known as instruction fine-tuning. For this training, it is necessary to compile a collection of instructions for the different types of tasks the chatbot is intended to carry out, i.e. examples of request-response pairs: questions with their answers, requests for summaries with the summaries, requests to correct incorrect texts with the corresponding corrections, requests for translations with the translations, requests to generate texts with the texts... In this way, the LLM learns how to respond appropriately to these types of requests.
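To make the idea of a collection of instructions more concrete, the following sketch shows how request-response pairs might be turned into training texts. The two example pairs and the template with its "### Request" and "### Response" markers are hypothetical; each model family defines its own format, and real collections contain many thousands of pairs.

```python
# Hypothetical request-response pairs, one per type of task.
instruction_pairs = [
    {
        "request": "Translate into English: Kaixo, zer moduz?",
        "response": "Hello, how are you?",
    },
    {
        "request": "Summarize in one sentence: LLMs predict the next word of a text.",
        "response": "LLMs are next-word predictors.",
    },
]

# Assumed template: the markers tell the model where the request ends
# and where its own answer should begin.
TEMPLATE = "### Request:\n{request}\n### Response:\n{response}"

def to_training_text(pair):
    """Join a request and its response into one text to continue training on."""
    return TEMPLATE.format(**pair)

training_texts = [to_training_text(pair) for pair in instruction_pairs]
print(training_texts[0])
```

Since the LLM only knows how to continue a text, training it on texts of this shape teaches it that a request is normally followed by a suitable response.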

Finally, for it to work better, a training phase called alignment with human preferences is needed. The LLM is asked to perform several tasks, producing more than one possible answer for each one, and then several people evaluate these answers. These scored answers are used in this last training phase, which brings the chatbot's answers closer to (“aligns” them with, to use the term of the field) the wishes or logic of human beings. There are different techniques for performing this step, such as Reinforcement Learning from Human Feedback (RLHF) or Direct Preference Optimization (DPO).

Building our own LLMs in Basque
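As an illustration of one of these techniques, the sketch below computes the DPO loss for a single pair of answers. It assumes that the human evaluations have already been turned into pairs (a preferred answer and a rejected one), and that we have the log-probability each answer receives from the model being trained and from a frozen reference copy of it. The function name, the example numbers and the default value of `beta` are assumptions for the example.

```python
import math

def dpo_loss(policy_chosen, policy_rejected, ref_chosen, ref_rejected, beta=0.1):
    """DPO loss for one preference pair, from answer log-probabilities.

    The margin measures how much more the trained model favours the
    preferred answer over the rejected one, compared with the reference.
    """
    margin = (policy_chosen - ref_chosen) - (policy_rejected - ref_rejected)
    # -log(sigmoid(beta * margin)), written as log(1 + exp(-beta * margin))
    return math.log1p(math.exp(-beta * margin))

# Before training, the model equals the reference: the margin is 0.
start = dpo_loss(-5.0, -5.0, -5.0, -5.0)

# After some training, the preferred answer has become more likely
# and the rejected one less likely: the loss goes down.
later = dpo_loss(-4.0, -6.0, -5.0, -5.0)

print(start, later)
```

Minimizing this loss over many pairs pushes the model towards the answers people preferred, without straying arbitrarily far from the reference model.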

We have said above that LLMs and chatbots are multilingual; in fact, they are generally trained with texts in many languages. But they do not work equally well in all languages, because the amount of text seen during training is not the same: the vast majority of texts are in English, there are far fewer in other languages (even the other major languages), not to mention smaller languages. However, despite these differences, an LLM can learn all languages relatively well thanks to the property known as transfer learning. It has been shown that neural networks have this property, which allows them to use the knowledge acquired in one or several domains or languages to learn another domain or language with less data; in the case of languages, moreover, less data is needed if they have already mastered a language of the same family (much as with humans). So, although in that previous article we said that ChatGPT did quite well in Basque but still had a lot to improve, the move from GPT 3.5 to GPT 4 brought a significant improvement, and it must be admitted that it now does very well in Basque.

Despite this, for several reasons (technological sovereignty, not leaving the future of our language in the hands of US multinationals, the loss of privacy involved in using the tools of the technological giants...), it is advisable to develop Basque LLMs and chatbots locally, and that is what some organizations in the Basque Country are doing.

Building an LLM in Basque from scratch, however, is not an easy task. To make them work well, they have to be trained with trillions of words, and there is nowhere near that much text in Basque. A sufficient number of texts can be obtained by using machine translation, and we have carried out pilot tests with this approach, but the results have not been satisfactory enough. In addition, the full training of these giant networks requires very powerful machines and takes an incredibly long time. This makes it very expensive and therefore not feasible.

For this reason, the usual way to go is to take a freely licensed, pre-trained foundational LLM and fine-tune it so that it learns Basque better. In short, this adjustment is a continuation of the training using Basque texts, which is why it is also called continual pre-training. The point is that this pre-trained LLM already knows other languages and has general knowledge and other skills and, thanks to transfer learning, does not require as much text or training time to learn Basque. In addition, if desired, techniques such as LoRA (Low-Rank Adaptation) can be used so that only a small number of parameters, rather than the entire network, have to be adjusted, thereby greatly reducing memory requirements.
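The saving that LoRA brings can be sketched with a little arithmetic. The idea is to leave a large weight matrix W frozen and to train only two small matrices, A and B, whose product is added to the output of W. The layer size (4096 by 4096) and the rank (8) below are illustrative assumptions, not figures from any of the models mentioned in this article, and the function names are hypothetical.

```python
def matvec(matrix, vector):
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(m * x for m, x in zip(row, vector)) for row in matrix]

def lora_forward(W, A, B, x, scale=1.0):
    """Output of a layer with a LoRA adapter: W x + scale * B (A x).

    W (d_out x d_in) stays frozen; only A (rank x d_in) and
    B (d_out x rank) would be updated during fine-tuning.
    """
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + scale * u for b, u in zip(base, update)]

def full_params(d_in, d_out):
    """Number of weights trained when the whole matrix is adjusted."""
    return d_in * d_out

def lora_params(d_in, d_out, rank):
    """Number of weights trained with LoRA: those of A plus those of B."""
    return rank * d_in + d_out * rank

full = full_params(4096, 4096)
lora = lora_params(4096, 4096, rank=8)
print(full, lora, f"{lora / full:.2%}")

# Tiny numeric check: with B set to zero the adapter changes nothing,
# so fine-tuning starts exactly from the pre-trained behaviour.
W = [[1, 0], [0, 1]]
A = [[1, 1]]
print(lora_forward(W, A, [[0], [0]], [2, 3]))
print(lora_forward(W, A, [[1], [0]], [2, 3]))
```

In this example the adapter has 65,536 trainable weights against 16,777,216 for the full matrix, that is, less than half a percent.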

The most recent work along this route uses LLaMa, which is developed by Meta and freely licensed. The HiTZ centre of the UPV/EHU, for example, starting from LLaMa 2 and continuing its training with the EusCrawl collection of texts, launched the Basque LLM Latxa in January last year, and later adapted and improved it in April with a larger corpus of texts. Latxa obtained better results than any other LLM in Basque proficiency (evaluated with preliminary tests of the EGA exam), and in general questions or tasks it was only surpassed by GPT 4 (on evaluation sets prepared with general questions, reading comprehension questions and civil-service exam questions).

At Orai NLP Technologies, our centre within Elhuyar, we took LLaMa 3.1 and adjusted it with the ZelaiHandi corpus (the largest collection of freely licensed Basque texts, compiled by Orai, of 521 million words), resulting in Llama-eus-8B, which was presented in September last year. Among the lightweight foundational models in Basque (less than 10 billion parameters), it has achieved the best results in all types of tasks, and in some tasks it also gives better results than much larger models.

And the Basque chatbots?

In view of these results, one may think that we already have a kind of locally created ChatGPT in Basque. But as we have seen, the results obtained by these local models for many types of task requests in Basque do not yet reach the level of GPT 4; and above all, the results are significantly lower than those obtained in English.

The reason for this is explained above: obtaining a functional chatbot from an LLM also requires the training phases of instruction fine-tuning and alignment with human preferences. It has been observed that one of the main reasons for the good performance of commercial chatbots is the data sets used in these two steps, which are very large and of high quality. And these types of data (sets of request-response pairs and sets of answers evaluated by people), unlike the texts for pre-training, which can be obtained automatically from the web in relatively large numbers, have to be produced manually by people, which is very expensive. The tech giants allocate a lot of money and manpower to this, and they do not release these data sets. Here, there are not enough resources to create them. There are some free data sets of this kind, but they are not big enough, they are not in Basque... Even without instruction fine-tuning, an LLM can be made to perform certain tasks better by giving one or more examples of the desired task in the request itself (this technique is known as prompt engineering or in-context learning), but the results are not as good as those obtained with fine-tuning.
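The technique of including examples in the request itself can be sketched as follows. The "Input:" and "Output:" labels, the function name and the word pairs are assumptions for the example; the resulting text would simply be given to the LLM, which is expected to continue it after the final "Output:".

```python
def build_few_shot_prompt(task, examples, query):
    """Build a request that contains solved examples before the real question."""
    lines = [task, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    # The last item is left unanswered for the model to complete.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)

prompt = build_few_shot_prompt(
    "Translate from Basque to English.",
    [("etxe", "house"), ("ur", "water")],
    "mendi",
)
print(prompt)
```

No training takes place here: the examples only steer the next-word prediction, which is why this is cheaper than fine-tuning but usually less effective.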

Because of all this, there is still a lot of work to do before we have our own functional chatbot. That does not mean we are not doing it. At Orai, for example, we have several lines of work in progress. For example, beyond knowing Basque and responding to general requests in Basque, we have analyzed whether LLMs and chatbots have knowledge about the Basque Country and Basque culture, creating a data set to evaluate this and releasing it under a free license. The data set is in English (after all, the chatbots of the large companies work better in English, and the aim was to evaluate their knowledge of Basque topics, not their Basque proficiency), and the questions were put to the chatbots in English. The conclusion is that they have a strong cultural bias: on average, they answer only about 20% of the questions on Basque topics correctly. We have tried to improve this by testing different techniques on free foundational models, and the experiments have been successful, raising the success rate to about 80%. HiTZ has also carried out similar work and evaluation.

At Orai we have also worked on the bias of LLMs in Basque. We have adapted BBQ, a data set used to measure LLM bias, to the Basque context and released it under a free license with the name BasqBBQ. Using it, the biases of the Basque LLMs (Latxa and Llama-eus-8B) have been measured and compared with those of the original LLaMa models on which they are based. It has been observed that the models adapted to Basque do not have a greater bias; rather the opposite.

Finally, we have also carried out the first experiments on instruction fine-tuning and alignment with human preferences. To this end, we have taken a series of freely available data sets in English, both of instructions (of the request-response type) and of scored answers, and after translating them into Basque by machine translation, we have applied these two additional training phases to our foundational model Llama-eus-8B. In doing so, we have built the first Basque chatbot to go through all the training phases. We have compared its results with those of the LLaMa chat model of similar size released by Meta, which Meta fine-tuned with instructions and aligned with human preferences using its private data, and we have seen that ours works much better in these generation tasks in Basque. However, the quality of the results still does not reach what these models achieve in their tasks in English, and still less what closed models such as ChatGPT achieve. After all, as has already been said, the open data sets for instruction and DPO training are not as large as those of the technological giants, and the fact that they have been translated by machine translation also has some impact.

All this work and much more will still have to be done to obtain a fully functional chatbot in Basque. But even when one is achieved, making it available for Basque society to use may be a problem. Just as it is expensive to train these giant neural networks, it is also very expensive to keep them up and running for people to use, since they require very powerful machines. The US tech giants that offer the most successful commercial chatbots do so in order to be at the forefront of this revolution and to gain market share, but they are losing an enormous amount of money. These models are not cost-effective and, in addition, there is the damage that large machines of this type do to the environment. Therefore, optimization is as important as improving the results of all these chatbots, that is, achieving the same results with more economically and ecologically sustainable neural networks.

And it is precisely along this path, it is said, that DeepSeek-V3, the open source LLM of the Chinese company DeepSeek, became popular at the end of January. Downloads of the app for using it surpassed those of ChatGPT in the USA, since it offered results comparable to ChatGPT's at a much lower price. This caused an earthquake in the stock market prices and in the future aspirations and expectations of AI and chip companies in the United States. But beyond the high quality of the results, the point is that, forced by the chip embargo imposed on China by the U.S., the company has had to look for ways to develop LLMs on less powerful chips and has apparently found them.

Taking advantage of several variations in the structure and training of the LLM that others had not used to date, the company claims that the cost of training DeepSeek-V3 was 6% of that of GPT-4 and that it required only 10% of the training energy of LLaMa 3.1. The cost of offering the model as a service is also much lower and so, although it is offered at a lower price, it is said to be the only one that does not lose money. That is, the results of DeepSeek are equivalent to those of closed models like ChatGPT and better than those of open source models like LLaMa, it is open source, and it is said to need less than a tenth of the energy (and therefore the cost) of the other models. It will soon be seen whether the suitability of this path is confirmed, whether the developers of the other models also take it, and whether it is also useful for us to develop and offer our own Basque models more quickly and cheaply.