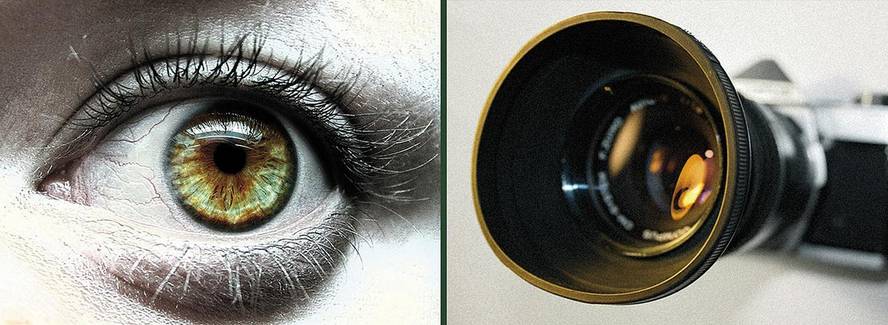

Eye and camera, camera and eye

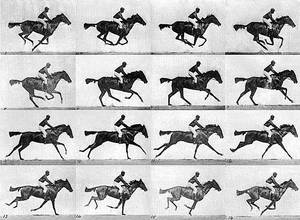

In 1872 there was an intense debate among horse-racing enthusiasts in California. The question was whether a galloping horse ever has all four legs in the air at once. Some said yes and others no, but the naked eye could not settle the matter with certainty. The only way to find out was with a camera, which can freeze an image in a way the eye cannot, so they hired the photographer Eadweard Muybridge. However, exploiting this ability with the cameras of the time was not easy. Muybridge spent six years setting up a six-camera system to photograph the horse's sequence of movements, and in 1878 he produced the photographic series entitled The Horse in Motion. It became clear that the answer was yes: at one point in the stride, all four feet are in the air. The camera saw what the eye could not. The result had a great impact, and the series became famous through journals such as Scientific American and La Nature (today La Recherche).

Three colors

Basically, the camera is a copy of the eye. "The basic operation is that light enters through a hole and that ray of light is recorded somewhere," says Juantxo Sardon, an expert at the company Pixel. In the eye, the entrance hole is the pupil; in the camera, it is the lens. In both cases the width of the hole can vary, thanks to the iris in the eye and the diaphragm in the camera.

The image is recorded on the retina in the eye and on a photosensitive surface in the camera. "In analog cameras it was a photosensitive negative (film), and in digital cameras it is a sensor. Until now CCD sensors were common, but new sensors, called CMOS, have emerged." CMOS sensors are cheaper and faster, and they are spreading through today's cameras. But basically all digital sensors treat light the way the eye does: they split it into signals for three primary colors, red, green and blue, and convert them into electricity. The CMOS sensor performs this conversion through a sophisticated process: it splits the light into the three colors, and the sensor itself digitizes this information without needing a separate chip. The mechanism of the retina is even more specialized: in its photosensitive cells (cones and rods), a molecule derived from vitamin A, retinal, works together with a protein, opsin, to transform light into electrical signals.

These are two ways of doing the same thing, but the reference is the human eye. The three primary colors are the colors of the light to which the cones of the retina respond. That is why we call them primary: all the colors that light can have arise from combining them. In reality, however, this combination is not a physical process; the combined colors are a product of the human brain. Even so, the camera adapts to the way the eye works: it converts each color it receives into a combination of red, green and blue light and then digitizes that combination.
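To make "digitizes that combination" concrete, here is a highly simplified sketch: three analog channel intensities (assumed to be normalized between 0.0 and 1.0) are turned into integers. The function name `digitize_rgb` and the 8-bit depth are assumptions for illustration; real sensors use color filter arrays and much more elaborate processing.

```python
def digitize_rgb(red, green, blue, bits=8):
    """Quantize three analog channel intensities (0.0 to 1.0) into integers.

    Hypothetical helper: the 8-bit default is an assumption, not a sensor spec.
    """
    levels = (1 << bits) - 1  # 255 possible steps above zero for 8 bits

    def quantize(x):
        x = min(max(x, 0.0), 1.0)  # clip signals outside the assumed range
        return round(x * levels)

    return (quantize(red), quantize(green), quantize(blue))


# Full red plus full green, no blue: the camera stores what the brain sees as yellow.
print(digitize_rgb(1.0, 1.0, 0.0))
```

The sensor only stores three numbers; the perception of "yellow" happens in the viewer, as the text says.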

One second, one hundred images

Capturing an image takes time; it is not an instantaneous process. The light that enters the eye is received by the retina, which must become saturated to complete the image. This takes time, about one hundredth of a second. Once this image is finished, the eye starts taking the next one. As a result, the eye can take 100 images per second.

Cameras can be faster, and Sardon has worked with high-speed ones. "Among high-speed cameras, one of the most prestigious brands is Phantom," he says. "It can record 2,000 frames per second. If we play the recording back at 25 frames per second, we get a very high-quality slow-motion effect. It has a special aesthetic quality."
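The slow-motion effect Sardon describes is simple arithmetic: the frames captured in one real second are spread over more seconds of playback. A minimal sketch, using the 2,000 and 25 frames per second figures from the text (the function names are illustrative):

```python
def slow_motion_factor(capture_fps, playback_fps):
    """How many times slower the action looks on screen."""
    return capture_fps / playback_fps


def screen_duration_s(real_duration_s, capture_fps, playback_fps):
    """Seconds of playback produced by a stretch of real time."""
    frames = real_duration_s * capture_fps  # frames recorded
    return frames / playback_fps            # time needed to show them


print(slow_motion_factor(2000, 25))    # 80.0: one real second lasts 80 s on screen
print(screen_duration_s(0.5, 2000, 25))  # 40.0
```

For comparison, the eye's roughly 100 images per second, played back at 25, would only slow the action down four times.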

High-speed cameras are also powerful tools for scientific research. In this respect the camera outdoes the eye: as in the case of Muybridge's horse photos, it captures images that the eye cannot catch. High-speed video makes it possible to observe physical processes such as the ejection of spores by fungi, the mixing of liquids inside a container, explosions and combustion, or the flight of bees.

The possibilities are endless: what looks like an instantaneous event often turns out to be a process with several steps. The transformation of a corn kernel into popcorn is a good example. First the hull of the kernel cracks; then the white material inside expands and bursts out through the crack; then another hole may open in the hull, and a new lump of white material expands and shoots the whole popcorn into the air.

However, capturing many images quickly comes at a price, since the camera has less time for each frame. This means, on the one hand, that little light reaches the camera in each frame, so the scene being filmed must be brightly lit; and, on the other, that as the speed increases, the frame size decreases: the frames have fewer pixels. "A Phantom records 2,000 frames per second at HD size, 1920 x 1080 pixels. But in 4K format (4096 x 3072 pixels), the largest format used in digital cinema, it records 500. However, manufacturers are constantly improving these numbers," says Sardon.
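The per-frame trade-off can be quantified with the two Phantom modes quoted above. This sketch (with a hypothetical helper, `frame_budget`) computes the longest possible exposure per frame, which is why the scene needs so much light, and the pixel counts involved:

```python
def frame_budget(width, height, fps):
    """Per-frame and per-second figures for one recording mode."""
    return {
        # A frame cannot be exposed longer than its time slot.
        "max_exposure_s": 1.0 / fps,
        "pixels_per_frame": width * height,
        "pixels_per_second": width * height * fps,
    }


hd = frame_budget(1920, 1080, 2000)  # HD mode quoted by Sardon
k4 = frame_budget(4096, 3072, 500)   # 4K mode quoted by Sardon

print(hd["max_exposure_s"], k4["max_exposure_s"])      # 0.0005 0.002
print(hd["pixels_per_frame"], k4["pixels_per_frame"])  # 2073600 12582912
```

At 2,000 frames per second each frame gets at most half a millisecond of light. Note that the two quoted modes do not move exactly the same number of pixels per second, so the trade-off is not a fixed pixel budget; real limits depend on the camera model.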

Panoramic eye

The eye's limit has the same origin. It is a very powerful organ, but it cannot take more than 100 images per second, because each image it receives contains a great deal of information. On the one hand, it takes images of hundreds of megapixels; on the other, its images are wider than those taken by a wide-angle lens: the eye sees an angle of more than 140 degrees, while wide-angle lenses cover about 120 degrees (although some lenses reach 180 degrees). It is true that the eyes, and especially the brain, do not process the center of the image and its edges equally, but the eye still receives a great deal of information that "slows down" its work.
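The angles quoted for camera lenses follow from a standard formula for an ideal rectilinear lens focused at infinity: angle = 2·atan(d / 2f), where d is the sensor dimension and f the focal length. The sketch below assumes a 36 x 24 mm "full-frame" sensor and measures along its diagonal; both choices are assumptions for illustration, not figures from the text.

```python
import math


def angle_of_view_deg(sensor_dimension_mm, focal_length_mm):
    """Angle of view of an ideal rectilinear lens focused at infinity."""
    return math.degrees(2 * math.atan(sensor_dimension_mm / (2 * focal_length_mm)))


full_frame_diagonal = math.hypot(36, 24)  # about 43.3 mm
print(round(angle_of_view_deg(full_frame_diagonal, 12.5)))  # 120
```

As the focal length shrinks, this angle approaches 180 degrees but never reaches it, which is why lenses covering 180 degrees are fisheyes that use a different, non-rectilinear projection.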

In addition, the eye does not change optics: it always works with the same lens, the crystalline lens. This is a bubble-shaped element inside the eye, and each of its faces behaves like a convex lens. It is held in place inside the eye, and muscles change its shape, which is the movement that focuses the image. Unlike a camera lens, the material of the crystalline lens is constantly renewed and, at least in young people, it is flexible. When it comes to optics, therefore, the eye's strategy is to work with a single high-quality "lens", which makes the crystalline lens far more sophisticated than any man-made camera lens.

The camera, for its part, follows the opposite strategy: it is fitted with a different lens for each type of image it is going to take. According to Sardon, the camera's advantage lies in the fact that simply changing the lens adapts it to the image you want to take. "When we shoot a short film, for example, we change the camera's optics for every shot. In fact, in fiction work, each shot is a picture," he says.

The camera can also work without optics. In fact, the first pictures in history were taken without lenses. "The cameras were dark boxes with a small hole to let the light in and a photosensitive material inside. There is a formula to calculate the diameter of the hole: it must be a certain size to project the image onto a support of a given size at a given distance," explains Sardon. The photographer would open the hole, and the light would form the image on the photosensitive material. "There are still people taking pictures that way. The most famous is Ilan Wolff."
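The text does not say which pinhole formula Sardon means. One commonly cited version is Lord Rayleigh's, d ≈ 1.9·√(f·λ), where f is the distance from the hole to the photosensitive material and λ the wavelength of the light; other authors use slightly different constants. A minimal sketch under that assumption, taking green light of 550 nm as a representative wavelength (also an assumption):

```python
import math


def pinhole_diameter_m(distance_m, wavelength_m=550e-9, k=1.9):
    """Optimal pinhole diameter by the Rayleigh-style rule d = k * sqrt(f * lambda).

    k = 1.9 and 550 nm are assumed defaults, not values from the article.
    """
    return k * math.sqrt(distance_m * wavelength_m)


# A box with the material 10 cm behind the hole:
print(round(pinhole_diameter_m(0.10) * 1000, 2), "mm")  # 0.45 mm
```

A smaller hole gives more diffraction blur, a larger one more geometric blur; the formula balances the two for a given distance.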

Photos taken this way are very limited. The depth of field, the angle of view the camera takes in, zoom effects and the like cannot be controlled. But it has one advantage: since there are no lenses, there is nothing in the way of the light. The lenses of conventional cameras are not completely transparent, and transparency is an important measure of lens quality. The parameter printed on every lens is the f-number, the focal length divided by the diameter of the aperture: the smaller the f-number, the more light the lens lets through, and the better. And here the eye again becomes the reference: the minimum value it can take is f = 1, the value of the cornea and the crystalline lens of the eye. Cameras can see what the eye cannot, but no man-made material matches the eye.
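The f-number relation can be sketched directly: N = focal length / aperture diameter, and the light reaching each unit of sensor area scales as 1/N², so halving N gives four times the light. The lens values below are hypothetical examples, not figures from the text.

```python
def f_number(focal_length_mm, aperture_diameter_mm):
    """f-number N: focal length divided by the diameter of the aperture."""
    return focal_length_mm / aperture_diameter_mm


def relative_light(n_a, n_b):
    """How many times more light per unit sensor area f/n_a gives than f/n_b."""
    return (n_b / n_a) ** 2


print(f_number(50, 25))       # 2.0: a hypothetical 50 mm lens opened to 25 mm
print(relative_light(1, 2))   # 4.0: f/1 gathers four times the light of f/2
print(round(f_number(100, 0.45)))  # 222: a 0.45 mm pinhole 100 mm from the film
```

The last line shows why pinhole photography needs long exposures: with no lens the f-number is in the hundreds, so very little light reaches the material.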